Neural Networks - Part 2#

import torch

import matplotlib.pyplot as plt

from torch import nn

from torch.utils.data import DataLoader

from torchvision import datasets

from torchvision.transforms import ToTensor

General Setup#

The layers in between the input and output layer are called hidden layers. There is no special meaning to this phrase; it simply indicates that these neurons are performing intermediate calculations. Deep Learning is a loosely defined term which implies that many hidden layers are being used.

The general setup consider \(L\) layers, with layers 1 and \(L\) being the input and output layers, respectiveley. Suppose that layer \(l\), for \(l=1, 2, \ldots, L\) contains \(n_l\) neurons. So \(n_1\) is the dimension of the input data.

Now suppose we have \(N\) samples of training data, $\( \left\{ x^{\{i\}} \right\}_{i=1}^N \subset \mathbb{R}^{n_1} \)\( for which there are given target outputs \)\( \left\{ y^{\{i\}} \right\}_{i=1}^N \subset \mathbb{R}^{n_L} \)$

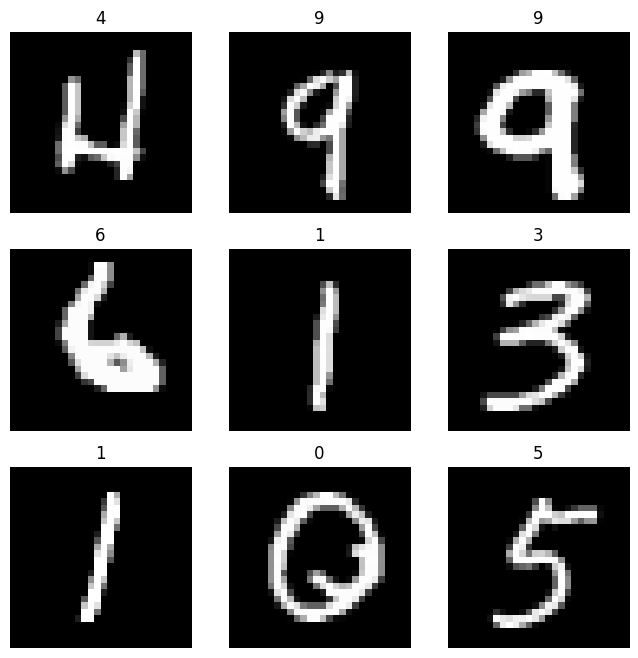

Let’s do a classification task with the same MNIST dataset but using PyTorch. Nowadyays, there are many Neural Network / Deep Learning frameworks availables, such as Tensorflow, Keras, JAX, Matlab, etc. We decided to use PyTorch since it has a really friendly user API.

First of all, we need the data. The way to access to it is different to scikit-learn, but it is essentialy the same but we load train and test sets in a separate way. Also, since the elements are images it is necessary to cast them to tensors.

training_data = datasets.MNIST(

root="data",

train=True,

download=True,

transform=ToTensor(),

)

test_data = datasets.MNIST(

root="data",

train=False,

download=True,

transform=ToTensor(),

)

0.3%

0.7%

1.0%

1.3%

1.7%

2.0%

2.3%

2.6%

3.0%

3.3%

3.6%

4.0%

4.3%

4.6%

5.0%

5.3%

5.6%

6.0%

6.3%

6.6%

6.9%

7.3%

7.6%

7.9%

8.3%

8.6%

8.9%

9.3%

9.6%

9.9%

10.2%

10.6%

10.9%

11.2%

11.6%

11.9%

12.2%

12.6%

12.9%

13.2%

13.6%

13.9%

14.2%

14.5%

14.9%

15.2%

15.5%

15.9%

16.2%

16.5%

16.9%

17.2%

17.5%

17.9%

18.2%

18.5%

18.8%

19.2%

19.5%

19.8%

20.2%

20.5%

20.8%

21.2%

21.5%

21.8%

22.1%

22.5%

22.8%

23.1%

23.5%

23.8%

24.1%

24.5%

24.8%

25.1%

25.5%

25.8%

26.1%

26.4%

26.8%

27.1%

27.4%

27.8%

28.1%

28.4%

28.8%

29.1%

29.4%

29.8%

30.1%

30.4%

30.7%

31.1%

31.4%

31.7%

32.1%

32.4%

32.7%

33.1%

33.4%

33.7%

34.0%

34.4%

34.7%

35.0%

35.4%

35.7%

36.0%

36.4%

36.7%

37.0%

37.4%

37.7%

38.0%

38.3%

38.7%

39.0%

39.3%

39.7%

40.0%

40.3%

40.7%

41.0%

41.3%

41.7%

42.0%

42.3%

42.6%

43.0%

43.3%

43.6%

44.0%

44.3%

44.6%

45.0%

45.3%

45.6%

45.9%

46.3%

46.6%

46.9%

47.3%

47.6%

47.9%

48.3%

48.6%

48.9%

49.3%

49.6%

49.9%

50.2%

50.6%

50.9%

51.2%

51.6%

51.9%

52.2%

52.6%

52.9%

53.2%

53.6%

53.9%

54.2%

54.5%

54.9%

55.2%

55.5%

55.9%

56.2%

56.5%

56.9%

57.2%

57.5%

57.9%

58.2%

58.5%

58.8%

59.2%

59.5%

59.8%

60.2%

60.5%

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz to data/MNIST/raw/train-images-idx3-ubyte.gz

60.8%

61.2%

61.5%

61.8%

62.1%

62.5%

62.8%

63.1%

63.5%

63.8%

64.1%

64.5%

64.8%

65.1%

65.5%

65.8%

66.1%

66.4%

66.8%

67.1%

67.4%

67.8%

68.1%

68.4%

68.8%

69.1%

69.4%

69.8%

70.1%

70.4%

70.7%

71.1%

71.4%

71.7%

72.1%

72.4%

72.7%

73.1%

73.4%

73.7%

74.0%

74.4%

74.7%

75.0%

75.4%

75.7%

76.0%

76.4%

76.7%

77.0%

77.4%

77.7%

78.0%

78.3%

78.7%

79.0%

79.3%

79.7%

80.0%

80.3%

80.7%

81.0%

81.3%

81.7%

82.0%

82.3%

82.6%

83.0%

83.3%

83.6%

84.0%

84.3%

84.6%

85.0%

85.3%

85.6%

85.9%

86.3%

86.6%

86.9%

87.3%

87.6%

87.9%

88.3%

88.6%

88.9%

89.3%

89.6%

89.9%

90.2%

90.6%

90.9%

91.2%

91.6%

91.9%

92.2%

92.6%

92.9%

93.2%

93.6%

93.9%

94.2%

94.5%

94.9%

95.2%

95.5%

95.9%

96.2%

96.5%

96.9%

97.2%

97.5%

97.9%

98.2%

98.5%

98.8%

99.2%

99.5%

99.8%

100.0%

Extracting data/MNIST/raw/train-images-idx3-ubyte.gz to data/MNIST/raw

100.0%

2.0%

4.0%

6.0%

7.9%

9.9%

11.9%

13.9%

15.9%

17.9%

19.9%

21.9%

23.8%

25.8%

27.8%

29.8%

31.8%

33.8%

35.8%

37.8%

39.7%

41.7%

43.7%

45.7%

47.7%

49.7%

51.7%

53.7%

55.6%

57.6%

59.6%

61.6%

63.6%

65.6%

67.6%

69.6%

71.5%

73.5%

75.5%

77.5%

79.5%

81.5%

83.5%

85.5%

87.4%

89.4%

91.4%

93.4%

95.4%

97.4%

99.4%

100.0%

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz to data/MNIST/raw/train-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/train-labels-idx1-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz to data/MNIST/raw/t10k-images-idx3-ubyte.gz

Extracting data/MNIST/raw/t10k-images-idx3-ubyte.gz to data/MNIST/raw

100.0%

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz to data/MNIST/raw/t10k-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/t10k-labels-idx1-ubyte.gz to data/MNIST/raw

training_data.classes

['0 - zero',

'1 - one',

'2 - two',

'3 - three',

'4 - four',

'5 - five',

'6 - six',

'7 - seven',

'8 - eight',

'9 - nine']

training_data.data.shape

torch.Size([60000, 28, 28])

training_data.targets.shape

torch.Size([60000])

test_data.data.shape

torch.Size([10000, 28, 28])

test_data.targets.shape

torch.Size([10000])

Let’s see some samples again.

figure = plt.figure(figsize=(8, 8))

cols, rows = 3, 3

for i in range(1, cols * rows + 1):

sample_idx = torch.randint(len(training_data), size=(1,)).item()

img, label = training_data[sample_idx]

figure.add_subplot(rows, cols, i)

plt.title(label)

plt.axis("off")

plt.imshow(img.squeeze(), cmap="gray")

plt.show()

DataLoader is a tool for handle batches and iterate over the dataset.

batch_size = 64

train_dataloader = DataLoader(training_data, batch_size=batch_size)

test_dataloader = DataLoader(test_data, batch_size=batch_size)

for X, y in test_dataloader:

print(f"Shape of X [N, C, H, W]: {X.shape}")

print(f"Shape of y: {y.shape} {y.dtype}")

break

Shape of X [N, C, H, W]: torch.Size([64, 1, 28, 28])

Shape of y: torch.Size([64]) torch.int64

Note the size of each batch, in particular X-batches are made of only one channel and 28 \(\times\) 28 pixels.

Model#

Before creating our model we need to select the device where PyTorch is going to work on. By default tensors are created on the CPU, but with the following line we can use the GPU (if it is available).

Remark: If you running this notebook on Google Colab you need to enable GPU manually. Go to Menu Menu > Runtime > Change Runtime and change hardware accelaration to GPU.

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using {device} device")

Using cpu device

To define a neural network in PyTorch, we create a class that inherits from nn.Module. We define the layers of the network in the __init__ function and specify how data will pass through the network in the forward function.

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.flatten = nn.Flatten()

self.linear_relu_stack = nn.Sequential(

nn.Linear(28 * 28, 512),

nn.ReLU(),

nn.Linear(512, 512),

nn.ReLU(),

nn.Linear(512, 10)

)

def forward(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logits

model = NeuralNetwork().to(device)

print(model)

NeuralNetwork(

(flatten): Flatten(start_dim=1, end_dim=-1)

(linear_relu_stack): Sequential(

(0): Linear(in_features=784, out_features=512, bias=True)

(1): ReLU()

(2): Linear(in_features=512, out_features=512, bias=True)

(3): ReLU()

(4): Linear(in_features=512, out_features=10, bias=True)

)

)

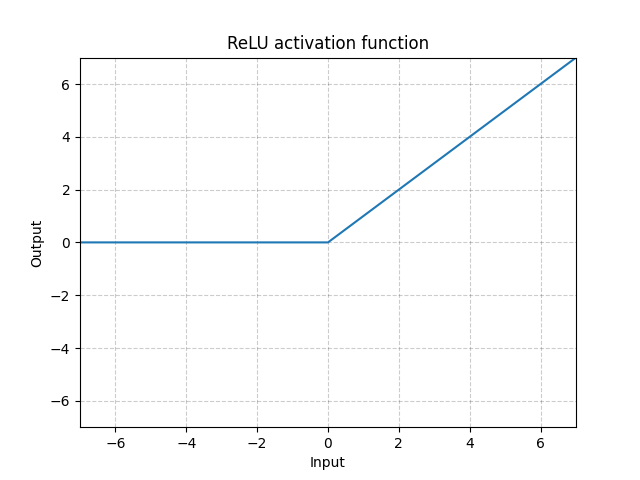

We just created a fully connected neural network made of three layers (just one hidden layer). Note the activation function is not a logistic one, but another non-linear function calle ReLU (Rectified Linear Unit) defined as

Optimization#

The quadratic cost function that we widh to minimize hast the form

where \(W\), \(b\) are the set of all the weight matrices and biases vectors, respectively.

We can minimize the cost function with gradient descent as we already saw previously. Without loss of generality, consider \(J: \mathbb{R}^s \to \mathbb{R}\) and \(\theta \in \mathbb{R}^s\), then the algorithm has the following form

where \(\alpha\) is the learning rate and the partial derivative of the cost function is a sum over the training data of individual partial derivatives, more precisely

Now, we have to choose a loss (cost) function and the optimizer.

Cross Entropy is useful when training a classification problem with several classes. In this cases, 10 digits.

Stochastic Gradient Descent with a learning rate of 0.001 (it is a standard practice to use this value as first guest).

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

Stochastic Gradient Descent#

When we have a large number of parameters and a large number of training points, computing the gradient vector at every iteration of the steepest descent method can be prohibitively expensive. A cheaper alternative is Stochastic Gradient Descent which has several variations, one of the most commons is to take a smaller set of training data in each iteration. For some \(m \ll N\) each iteration has the following form:

Choose \(m\) integers, \(k_1, k_2, \ldots, k_m\) uniformly at random from \(\{1, 2, \ldots, N\}\).

Update $\( \theta \to \theta - \alpha \frac{1}{m} \sum_{i=1}^m \nabla C_{x^{\{k_i\}}}(\theta) \)$

In this iteration, the set \(\{ x^{\{k_i\}} \}_{i=1}^k\) is known as a minibatch. There is a without replacement alternative where, assuming \(N = Km\) for some \(K\), we split the training set randomly into \(K\) distinct minibatches and cycle through them.

Back Propagation#

Our task is to compute partial derivatives of the cost function with respect to each element of \(W^{[l]}\) and \(b^{[l]}\). We therefore focus our attention on computing those individual partial derivatives.

Hence, for a fixed \(i\)-th training sample we want to compute the derivatives of \(C_{x^{\{i\}}}\), so we may drop the dependence on \(x^{\{i\}}\) and simply write

It is useful introduce two further sets of variables. First we let $\( z^{[l]} = W^{[l]} a^{[l-1]} + b^{[l]} \in \mathbb{R}^{n_l} \quad \text{for} \; l=2, 3, \ldots, L. \)\( where \)z_j^{[l]}\(, the \)j\(-th component of \)z_j^{[l]}\( is the _weighted input_ for neuron \)j\( at layer \)l$.

Secondly, $\( \delta_j^{[l]} = \dfrac{\partial C}{\partial z_j^{[l]}} \in \mathbb{R}^{n_l} \quad \text{for} \; 1 \leq j \leq n_l \quad \text{and} \quad 2 \leq l \leq L \)$

The output \(a^{[L]}\) can be evaluated from a forward pass through the network, computing in order $\( a^{[1]}, z^{[2]}, a^{[2]}, z^{[3]}, a^{[3]}, \ldots, z^{[L]}, a^{[L]} \)\( Then, \)\( \delta^{[L-1]}, \delta^{[L-2]}, \ldots, \delta^{[2]} \)\( can be computed in backward pass such that \)\( \dfrac{\partial C}{\partial W^{[l]}_{j, k}} = \delta_j^{[l]} a_k^{[l-1]} \quad \text{and} \quad \dfrac{\partial C}{\partial \beta^{[l]}_j} = \delta^{[l]}_j \quad \text{for} \; 1 \leq j, k \leq n_l \quad \text{and} \quad 2 \leq l \leq L \)$

Computing gradients in this way is known as back propagation.

Another good resource for understand back propagation is this website.

In a single training loop, the model makes predictions on the training dataset (fed to it in batches), and backpropagates the prediction error to adjust the model’s parameters.

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

model.train()

for batch, (X, y) in enumerate(dataloader):

X, y = X.to(device), y.to(device)

# Compute prediction error

pred = model(X)

loss = loss_fn(pred, y)

# Backpropagation

optimizer.zero_grad()

loss.backward()

optimizer.step()

if batch % 100 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

We also check the model’s performance against the test dataset to ensure it is learning.

def test(dataloader, model, loss_fn):

size = len(dataloader.dataset)

num_batches = len(dataloader)

model.eval()

test_loss, correct = 0, 0

with torch.no_grad():

for X, y in dataloader:

X, y = X.to(device), y.to(device)

pred = model(X)

test_loss += loss_fn(pred, y).item()

correct += (pred.argmax(1) == y).type(torch.float).sum().item()

test_loss /= num_batches

correct /= size

print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

Finally, we have to decide the number of epochs and just train the data. Sometimes epochs and batch size confuse people.

The batch size is a hyperparameter of gradient descent that controls the number of training samples to work through before the model’s internal parameters are updated.

The number of epochs is a hyperparameter of gradient descent that controls the number of complete passes through the training dataset.

epochs = 5

for t in range(epochs):

print(f"Epoch {t+1}\n-------------------------------")

train(train_dataloader, model, loss_fn, optimizer)

test(test_dataloader, model, loss_fn)

print("Done!")

Epoch 1

-------------------------------

loss: 2.309290 [ 0/60000]

loss: 2.307942 [ 6400/60000]

loss: 2.297043 [12800/60000]

loss: 2.293312 [19200/60000]

loss: 2.305474 [25600/60000]

loss: 2.298270 [32000/60000]

loss: 2.272187 [38400/60000]

loss: 2.281242 [44800/60000]

loss: 2.270520 [51200/60000]

loss: 2.258932 [57600/60000]

Test Error:

Accuracy: 36.3%, Avg loss: 2.265666

Epoch 2

-------------------------------

loss: 2.263234 [ 0/60000]

loss: 2.260225 [ 6400/60000]

loss: 2.263754 [12800/60000]

loss: 2.237270 [19200/60000]

loss: 2.261443 [25600/60000]

loss: 2.253385 [32000/60000]

loss: 2.221959 [38400/60000]

loss: 2.243794 [44800/60000]

loss: 2.216282 [51200/60000]

loss: 2.204513 [57600/60000]

Test Error:

Accuracy: 54.8%, Avg loss: 2.209960

Epoch 3

-------------------------------

loss: 2.202790 [ 0/60000]

loss: 2.196324 [ 6400/60000]

loss: 2.214802 [12800/60000]

loss: 2.156578 [19200/60000]

loss: 2.196867 [25600/60000]

loss: 2.185695 [32000/60000]

loss: 2.140753 [38400/60000]

loss: 2.179746 [44800/60000]

loss: 2.127896 [51200/60000]

loss: 2.111852 [57600/60000]

Test Error:

Accuracy: 62.8%, Avg loss: 2.116520

Epoch 4

-------------------------------

loss: 2.102810 [ 0/60000]

loss: 2.090307 [ 6400/60000]

loss: 2.130004 [12800/60000]

loss: 2.022978 [19200/60000]

loss: 2.085523 [25600/60000]

loss: 2.068079 [32000/60000]

loss: 1.998628 [38400/60000]

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Cell In[15], line 4

2 for t in range(epochs):

3 print(f"Epoch {t+1}\n-------------------------------")

----> 4 train(train_dataloader, model, loss_fn, optimizer)

5 test(test_dataloader, model, loss_fn)

6 print("Done!")

Cell In[13], line 4, in train(dataloader, model, loss_fn, optimizer)

2 size = len(dataloader.dataset)

3 model.train()

----> 4 for batch, (X, y) in enumerate(dataloader):

5 X, y = X.to(device), y.to(device)

7 # Compute prediction error

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/utils/data/dataloader.py:633, in _BaseDataLoaderIter.__next__(self)

630 if self._sampler_iter is None:

631 # TODO(https://github.com/pytorch/pytorch/issues/76750)

632 self._reset() # type: ignore[call-arg]

--> 633 data = self._next_data()

634 self._num_yielded += 1

635 if self._dataset_kind == _DatasetKind.Iterable and \

636 self._IterableDataset_len_called is not None and \

637 self._num_yielded > self._IterableDataset_len_called:

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/utils/data/dataloader.py:677, in _SingleProcessDataLoaderIter._next_data(self)

675 def _next_data(self):

676 index = self._next_index() # may raise StopIteration

--> 677 data = self._dataset_fetcher.fetch(index) # may raise StopIteration

678 if self._pin_memory:

679 data = _utils.pin_memory.pin_memory(data, self._pin_memory_device)

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py:51, in _MapDatasetFetcher.fetch(self, possibly_batched_index)

49 data = self.dataset.__getitems__(possibly_batched_index)

50 else:

---> 51 data = [self.dataset[idx] for idx in possibly_batched_index]

52 else:

53 data = self.dataset[possibly_batched_index]

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py:51, in <listcomp>(.0)

49 data = self.dataset.__getitems__(possibly_batched_index)

50 else:

---> 51 data = [self.dataset[idx] for idx in possibly_batched_index]

52 else:

53 data = self.dataset[possibly_batched_index]

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torchvision/datasets/mnist.py:145, in MNIST.__getitem__(self, index)

142 img = Image.fromarray(img.numpy(), mode="L")

144 if self.transform is not None:

--> 145 img = self.transform(img)

147 if self.target_transform is not None:

148 target = self.target_transform(target)

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torchvision/transforms/transforms.py:137, in ToTensor.__call__(self, pic)

129 def __call__(self, pic):

130 """

131 Args:

132 pic (PIL Image or numpy.ndarray): Image to be converted to tensor.

(...)

135 Tensor: Converted image.

136 """

--> 137 return F.to_tensor(pic)

File /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torchvision/transforms/functional.py:174, in to_tensor(pic)

172 img = img.permute((2, 0, 1)).contiguous()

173 if isinstance(img, torch.ByteTensor):

--> 174 return img.to(dtype=default_float_dtype).div(255)

175 else:

176 return img

KeyboardInterrupt:

Sources: