System of Differential Equations#

# !pip install deepxde # Run this line if you are in Google Colab

Let’s motivate this section with the Lotka-Volterra equations, also known as the predator-prey equations, are a pair of first-order nonlinear differential equations, frequently used to describe the dynamics of biological systems in which two species interact. The populations change through time according to the pair of equations: $\( \begin{align*} \dfrac{dx}{dt} &= \alpha x - \beta xy \\ \dfrac{dy}{dt} &= -\gamma y + \delta xy \end{align*} \)$ where

\(x\) is the number of prey;

\(y\) is the number of some predator;

\({\tfrac {dy}{dt}}\) and \({\tfrac {dx}{dt}}\) represent the instantaneous growth rates of the two populations;

\(t\) represents time;

\(\alpha\), \(\beta\), \(\gamma\) and \(\delta\) are positive real parameters describing the interaction of the two species.

We will need the following packages, numpy for array operations, matplotlib for visualizations and scipy for getting for getting a numerical solution with Runge-Kutta method.

import numpy as np

import matplotlib.pyplot as plt

from scipy.integrate import solve_ivp

For this module we will use the following values of \(\alpha\), \(\beta\), \(\gamma\) and \(\delta\).

alpha = 2 / 3

beta = 4 / 3

gamma = 1

delta = 1

It is important to decide a time invertal where we will working on. As an example let’s consider between \(t=0\) and \(t=1\). As well as initial conditions \(x(0)\) and \(y(0)\).

t_initial = 0

t_final = 10

x0 = 1.2

y0 = 0.8

Runge-Kutta#

Note we can modularize this step creating a function, for instance, runge_kutta which takes as input an array of time steps, initial conditions and the values of the parameters that describe the interaction between the two species.

def runge_kutta(

t,

x0,

y0,

alpha,

beta,

gamma,

delta

):

def func(t, Y):

x, y = Y

dx_dt = alpha * x - beta * x * y

dy_dt = - gamma * y + delta * x * y

return dx_dt, dy_dt

Y0 = [x0, y0]

t_span = (t[0], t[-1])

sol = solve_ivp(func, t_span, Y0, t_eval=t)

x_true, y_true = sol.y

return x_true, y_true

Now, let’s generate an time array and solve the initial value problem.

t_array = np.linspace(t_initial, t_final, 100)

x_rungekutta, y_rungekutta = runge_kutta(t_array, x0, y0, alpha, beta, gamma, delta)

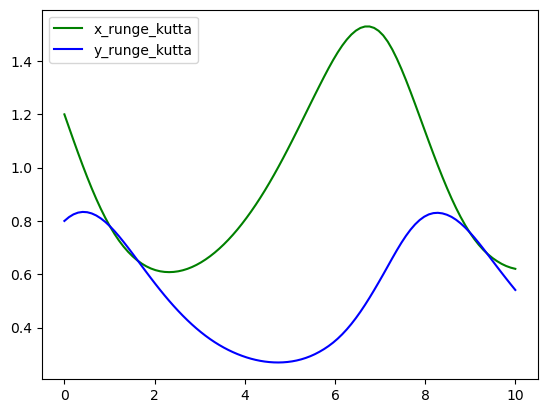

We suggest you to plot your results since it can give you better insights of your simulations or predictions.

plt.plot(t_array, x_rungekutta, color="green", label="x_runge_kutta")

plt.plot(t_array, y_rungekutta, color="blue", label="y_runge_kutta")

plt.legend()

plt.show()

Physics-Informed Neural Networks#

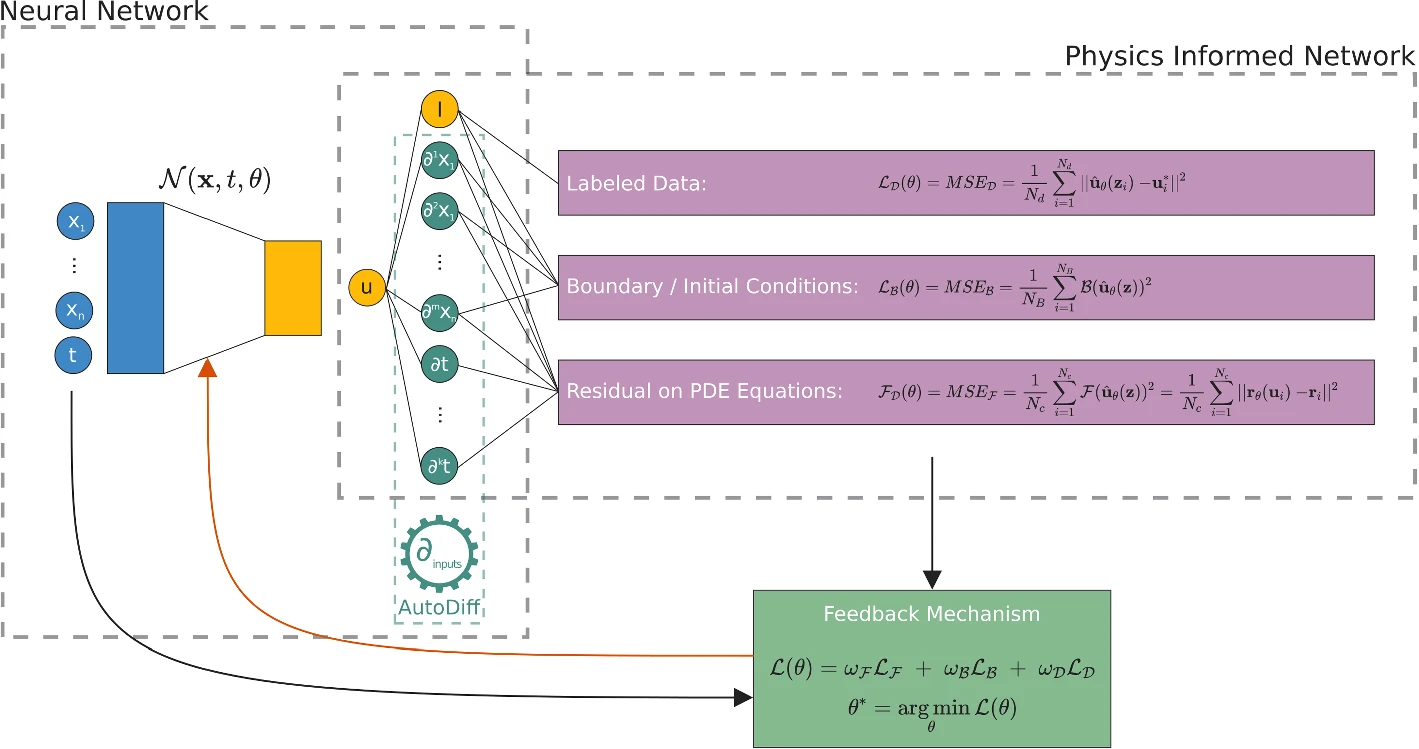

For years mathematicians and physicists are trying to model the world with differential equations. However, since the advent of techniques such as machine learning, neural networks and deep learning together with greater computing power, community has speculated that we could learn automatically (algorithms) anything with a enough amount of data. However, it seems this is not really true. In 2019, Raissi, Perdikaris and Karniadakis introduced Physics-Informed Neural Networks (PINNs), neural networks that are trained to solve supervised learning tasks while respecting any given law of physics described by general nonlinear partial differential equations (source). PINNs are nowadays used to solve PDEs, fractional equations, and integral-differential equations. PINNs approximate PDE solutions by training a neural network to minimize a loss function, including:

Initial and boundary conditions along the space-time domain’s boundary

PDE residual at selected points in the domain.

If you want to do a simplified analogy, initial and boundary conditions points will be an usual training dataset, but also it is necessary to embed physical laws (PDE) into the neural network. PINNs can solve differential equations expressed, in the most general form, like:

defined on the domain \(\Omega \subset \mathbb{R}^d\) with the boundary \(\partial \Omega\). Where

\(z := (x_1, x_2, \ldots, t)^\top\) indicated the space-time coordinate vector,

\(u\) the unknown function,

\(\lambda\) the parameters related to the physics,

\(\mathcal{F}\) the non-linear differential operator,

\(f\) the function identifying the data of the problem,

\(\mathcal{B}\) the operator indicating arbitrary initial or boundary conditions, and

\(g\) the boundary function.

In the PINN methodology, \(u(z)\) is computationally predicted by a NN, parameterized by a set of parameters \(\theta\), giving rise to an approximation $\( \hat{u}_\theta(z) \approx u(z) \)$

The optimization problem we want to deal with it is

this is three weighted loss functions, each one depending on

\(\mathcal{L}_\mathcal{F}\), differential equation,

\(\mathcal{L}_\mathcal{B}\), boundary conditions, and

\(\mathcal{L}_{\text{data}}\), (eventually) some known data.

PINNs for solving ODEs#

For our Lotka-Volterra system the solution \(u\) will be a vector such that $\( u(t) = (x(t), y(t))^\top \)$ and there are not boundary conditions, only initial conditions.

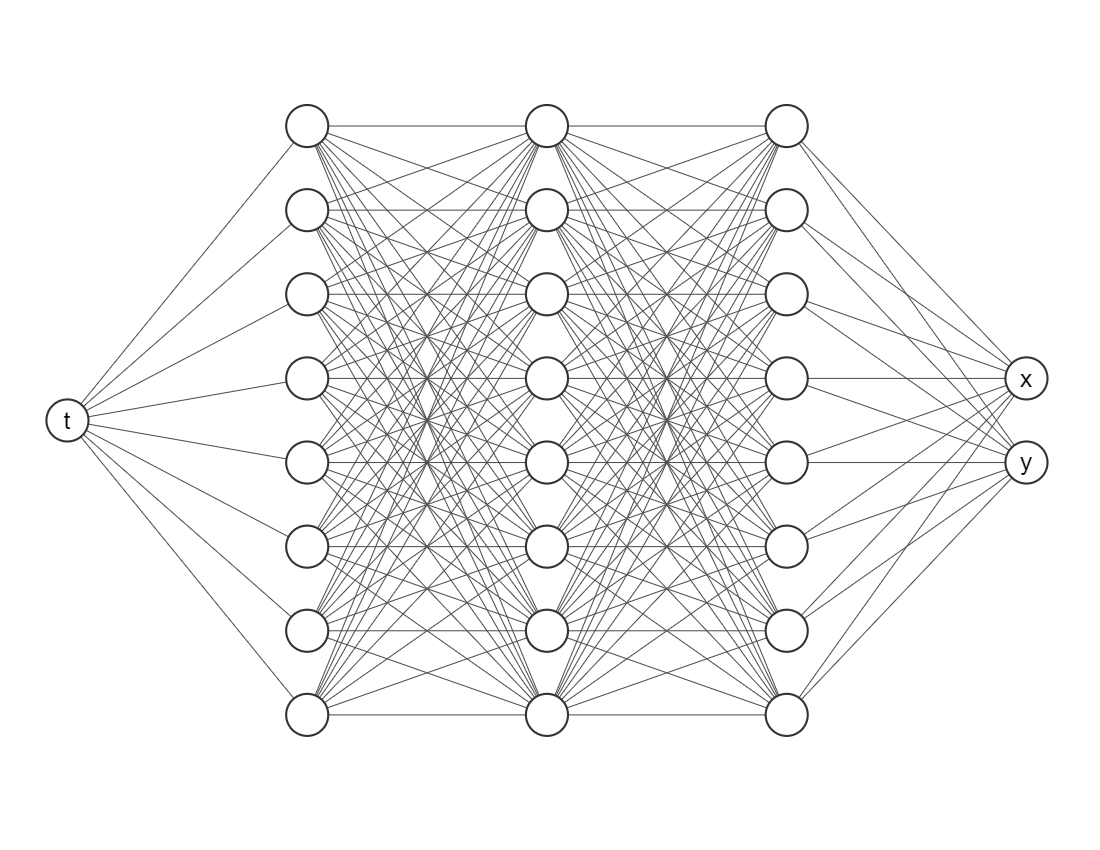

We want to train a network that looks like this

The most important package is deepxde, which allows us to implement Physic-Informed Neural Networks approaches with a few lines of code.

import deepxde as dde

from deepxde.backend import tf

Using backend: tensorflow.compat.v1

2023-05-29 15:08:22.805541: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F AVX512_VNNI AVX512_BF16 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2023-05-29 15:08:22.876404: I tensorflow/core/util/port.cc:104] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

2023-05-29 15:08:23.242926: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: :/home/alonsolml/mambaforge/envs/nc-book/lib/

2023-05-29 15:08:23.244527: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: :/home/alonsolml/mambaforge/envs/nc-book/lib/

2023-05-29 15:08:23.244535: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.

WARNING:tensorflow:From /home/alonsolml/mambaforge/envs/nc-book/lib/python3.10/site-packages/tensorflow/python/compat/v2_compat.py:107: disable_resource_variables (from tensorflow.python.ops.variable_scope) is deprecated and will be removed in a future version.

Instructions for updating:

non-resource variables are not supported in the long term

Enable just-in-time compilation with XLA.

2023-05-29 15:08:23.905929: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:23.918928: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:23.918972: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

WARNING:tensorflow:From /home/alonsolml/mambaforge/envs/nc-book/lib/python3.10/site-packages/deepxde/nn/initializers.py:118: The name tf.keras.initializers.he_normal is deprecated. Please use tf.compat.v1.keras.initializers.he_normal instead.

ODE Residuals#

Since we are trying to embed the physics onto the neural networks we need to define

def ode(t, Y):

x = Y[:, 0:1]

y = Y[:, 1:2]

dx_dt = dde.grad.jacobian(Y, t, i=0)

dy_dt = dde.grad.jacobian(Y, t, i=1)

return [

dx_dt - alpha * x + beta * x * y,

dy_dt + gamma * y - delta * x * y

]

Here t is the indepent variable and Y is an array with two columns (since our system considers two equations). To define the first derivative is as easy as using dde.grad.jacobian, just be sure about the component i, in this case we decided i=0 corresponds to the variable \(x(t)\) and i=1 to \(y(t)\).

Initial conditions#

And now we need to declare this element for our neural network, if not, the algorithm wouldn’t know where to make the estimations.

geom = dde.geometry.TimeDomain(t_initial, t_final)

Then we have to create a function for defining boundaries, since our geometry it is only on time we will use the default one, don’t worry about it.

def boundary(_, on_initial):

return on_initial

And then we have to tell to our algorithm these are the initial conditions for the learning process

ic_x = dde.icbc.IC(geom, lambda x: x0, boundary, component=0)

ic_y = dde.icbc.IC(geom, lambda x: y0, boundary, component=1)

Data object#

Everything related to the differential equations and initial conditions has to be inside a new object dde.data.PDE (do not worry, it also consider systems of ordinary differential equations).

data = dde.data.PDE(

geom,

ode,

[ic_x, ic_y],

num_domain=512,

num_boundary=2

)

In order to test our model we need more points, we considered 512 points inside our domain with num_domain=512. Finally, since we are working on a time domain there are only two points in its boundary (num_boundary=2).

Neural Network#

It is time for choosing a neural network architecture. For simplicity, we will use a Fully-connected neural network (`dde.nn.FNN’). The most important things are:

Input layer (the first one) needs only one node/neuron since our indepent variable is only time \(t\).

The output layer (the last one) nneds two nodes/neurons since we are working on a system of two equations.

Do not worry so much about the amount of layers or neurons in each hidden layer, as a rule of thumb error should decrease while you add more layers and neurons, but it will take more computational time. Activation functions and the initializer are more parameters the user must choose, usually Glorot normal works well as initializer. However, we would recommend you to try different activation functions, for example, relu, sigmoid or swish.

neurons = 64

layers = 6

activation = "tanh"

initializer = "Glorot normal"

net = dde.nn.FNN([1] + [neurons] * layers + [2], activation, initializer)

Model Object#

The library we are working with needs put everything together in a new object, but it is just one line of code.

model = dde.Model(data, net)

Training#

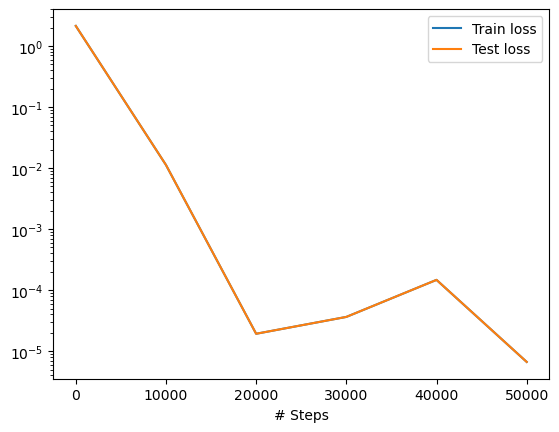

For training we will go with an Adam optimizer (a very popular one nowadays) and a learning rate of 0.001 (smaller learning rates may give you better results but it will take many more iterations).

Just for simplicity we will take 50000 iterations, but another rule of thumb it is that as you increase the number of iterations the loss value should decrease as well.

model.compile("adam", lr=0.001)

losshistory, train_state = model.train(iterations=50000, display_every=10000)

Compiling model...

Building feed-forward neural network...

'build' took 0.040949 s

/home/alonsolml/mambaforge/envs/nc-book/lib/python3.10/site-packages/deepxde/nn/tensorflow_compat_v1/fnn.py:103: UserWarning: `tf.layers.dense` is deprecated and will be removed in a future version. Please use `tf.keras.layers.Dense` instead.

return tf.layers.dense(

2023-05-29 15:08:24.653036: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F AVX512_VNNI AVX512_BF16 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2023-05-29 15:08:24.653995: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:24.654116: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:24.654181: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:25.093244: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:25.093508: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:25.093517: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1700] Could not identify NUMA node of platform GPU id 0, defaulting to 0. Your kernel may not have been built with NUMA support.

2023-05-29 15:08:25.093553: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node

Your kernel may have been built without NUMA support.

2023-05-29 15:08:25.093564: W tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:42] Overriding orig_value setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. Original config value was 0.

2023-05-29 15:08:25.093584: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1613] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 7369 MB memory: -> device: 0, name: NVIDIA GeForce RTX 3080, pci bus id: 0000:01:00.0, compute capability: 8.6

'compile' took 0.711830 s

Initializing variables...

Training model...

2023-05-29 15:08:25.329737: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:357] MLIR V1 optimization pass is not enabled

2023-05-29 15:08:25.401502: I tensorflow/compiler/xla/service/service.cc:173] XLA service 0x7fc90c00d960 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:

2023-05-29 15:08:25.401537: I tensorflow/compiler/xla/service/service.cc:181] StreamExecutor device (0): NVIDIA GeForce RTX 3080, Compute Capability 8.6

2023-05-29 15:08:25.405898: I tensorflow/compiler/mlir/tensorflow/utils/dump_mlir_util.cc:268] disabling MLIR crash reproducer, set env var `MLIR_CRASH_REPRODUCER_DIRECTORY` to enable.

2023-05-29 15:08:25.559289: I tensorflow/tsl/platform/default/subprocess.cc:304] Start cannot spawn child process: Permission denied

2023-05-29 15:08:25.632490: I tensorflow/compiler/jit/xla_compilation_cache.cc:477] Compiled cluster using XLA! This line is logged at most once for the lifetime of the process.

Step Train loss Test loss Test metric

0 [9.65e-04, 5.28e-02, 1.44e+00, 6.40e-01] [9.65e-04, 5.28e-02, 1.44e+00, 6.40e-01] []

2023-05-29 15:08:26.030817: I tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:630] TensorFloat-32 will be used for the matrix multiplication. This will only be logged once.

10000 [8.89e-03, 2.23e-03, 2.29e-05, 1.08e-04] [8.89e-03, 2.23e-03, 2.29e-05, 1.08e-04] []

20000 [1.37e-05, 4.01e-06, 3.52e-07, 1.26e-06] [1.37e-05, 4.01e-06, 3.52e-07, 1.26e-06] []

30000 [2.22e-05, 8.82e-06, 3.31e-06, 2.08e-06] [2.22e-05, 8.82e-06, 3.31e-06, 2.08e-06] []

40000 [1.32e-04, 9.52e-06, 1.45e-06, 4.53e-06] [1.32e-04, 9.52e-06, 1.45e-06, 4.53e-06] []

50000 [4.34e-06, 1.70e-06, 4.41e-07, 1.91e-07] [4.34e-06, 1.70e-06, 4.41e-07, 1.91e-07] []

Best model at step 50000:

train loss: 6.67e-06

test loss: 6.67e-06

test metric: []

'train' took 65.769597 s

We can plot the loss history with a simple command.

dde.utils.external.plot_loss_history(losshistory)

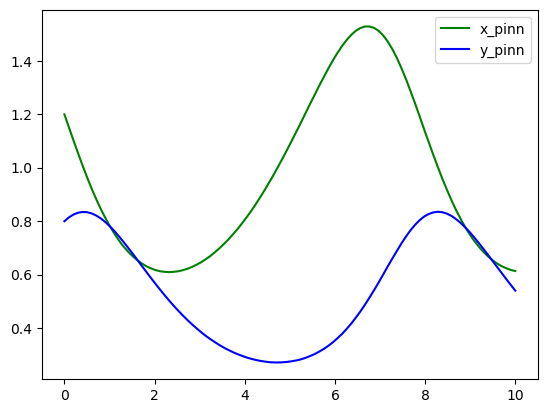

Now, the prediction with PINNs

pinn_pred = model.predict(t_array.reshape(-1, 1))

x_pinn = pinn_pred[:, 0:1]

y_pinn = pinn_pred[:, 1:2]

plt.plot(t_array, x_pinn, color="green", label="x_pinn")

plt.plot(t_array, y_pinn, color="blue", label="y_pinn")

plt.legend()

plt.show()

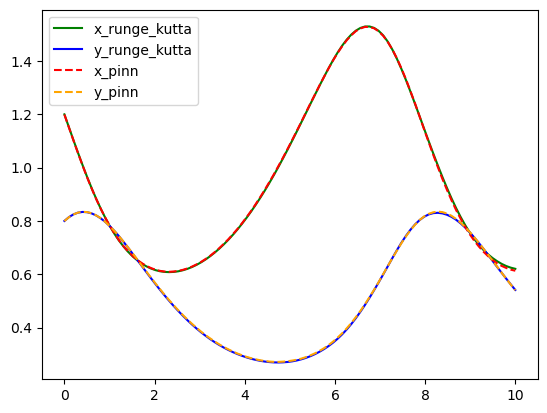

As you can see both algorithms gave us almost identical results. One of the pros that we would like to point out about Physics-Informed neural networks is that for more complex systems you only need to change a very few things more, specifically residuals. Most of the numerical work is done automatically by machine learning libraries as TensorFlow, Torch, JAX, etc. so it is easy to scale it up, even better when we can take advantage of GPUs. For you, as an user, your challenge will be in pick suitable hyper-parameters (number of layers, number of neurons, activation function, number of iterations, etc.) but this also could be done by other algorithms, however these are out of the scope of this lesson.

Finally, we can compare both models and notice they are practically the same curves.

plt.plot(t_array, x_rungekutta, color="green", label="x_runge_kutta")

plt.plot(t_array, y_rungekutta, color="blue", label="y_runge_kutta")

plt.plot(t_array, x_pinn, color="red", linestyle="dashed", label="x_pinn")

plt.plot(t_array, y_pinn, color="orange", linestyle="dashed", label="y_pinn")

plt.legend()

plt.show()